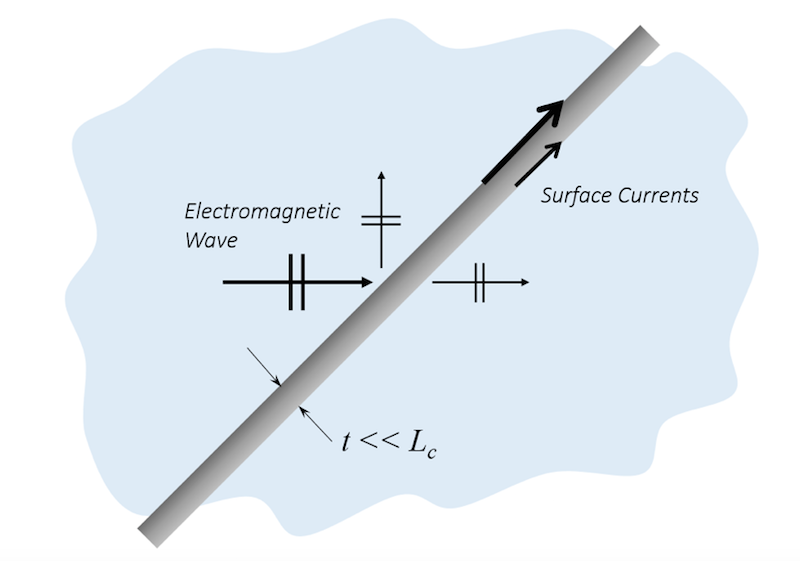

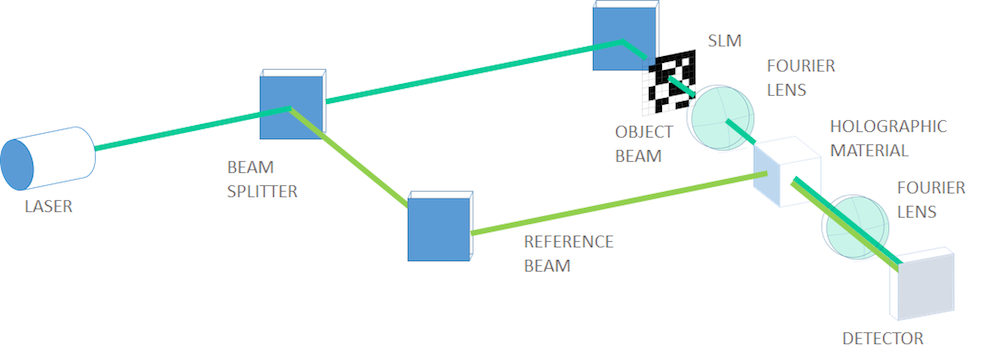

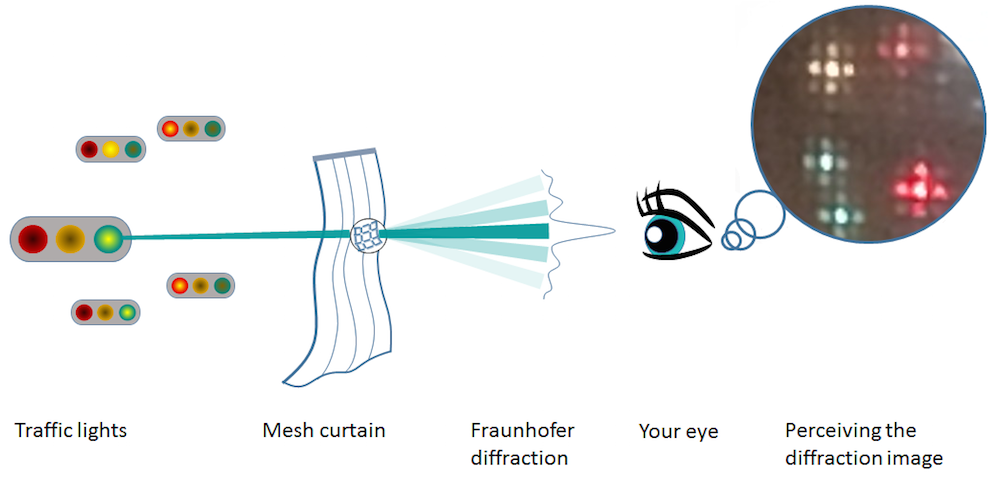

We often want to model an electromagnetic wave (light, microwaves) incident upon periodic structures, such as diffraction gratings, metamaterials, or frequency selective surfaces. This can be done using the RF or Wave Optics modules from the COMSOL product suite. Both modules provide Floquet periodic boundary conditions and periodic ports and compute the reflected and transmitted diffraction orders as a function of incident angles and wavelength. This blog post introduces the concepts behind this type of analysis and walks through the set-up of such problems.

The Scenario

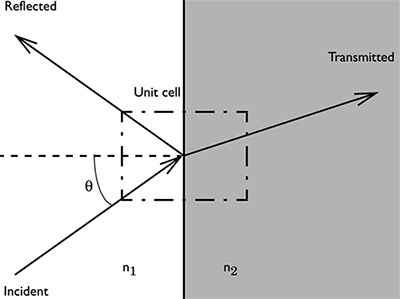

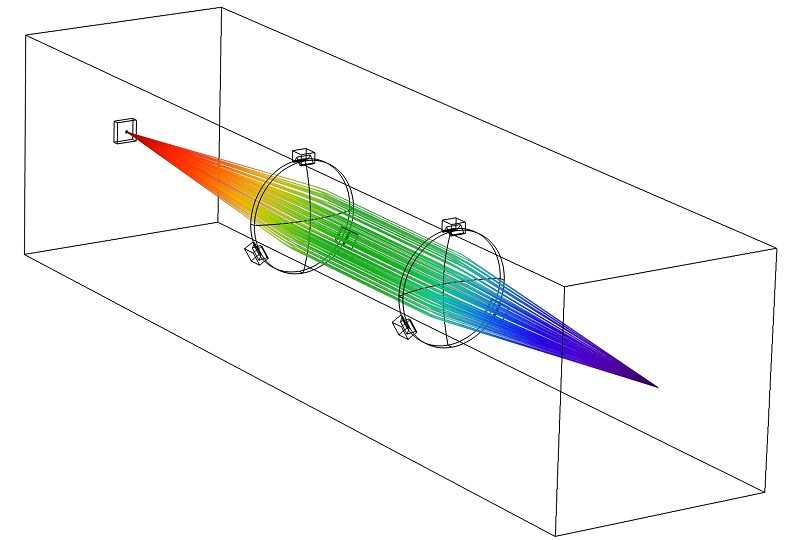

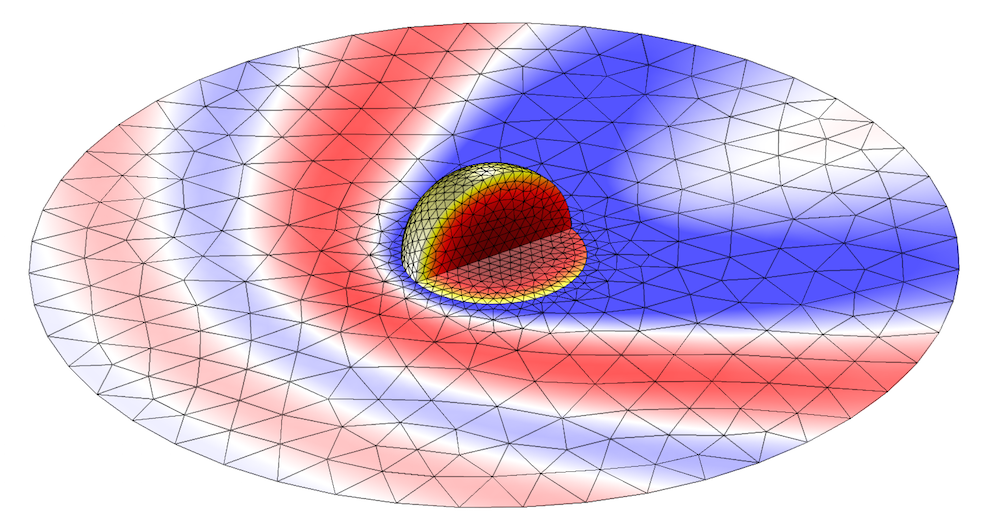

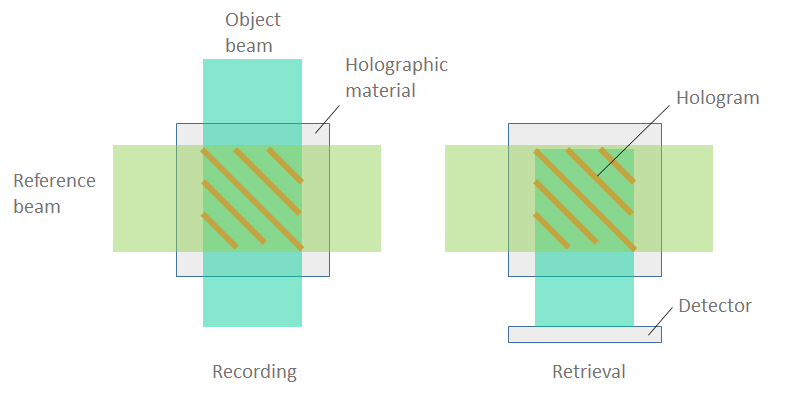

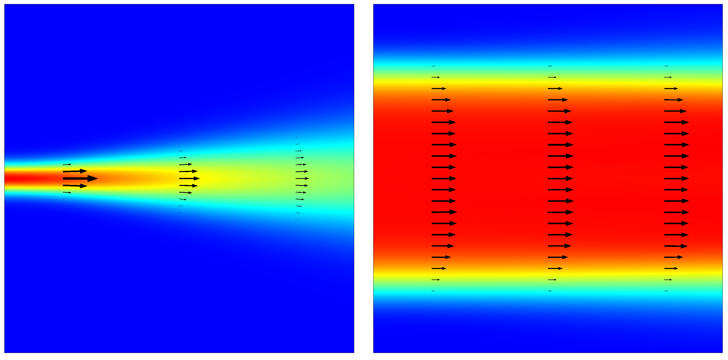

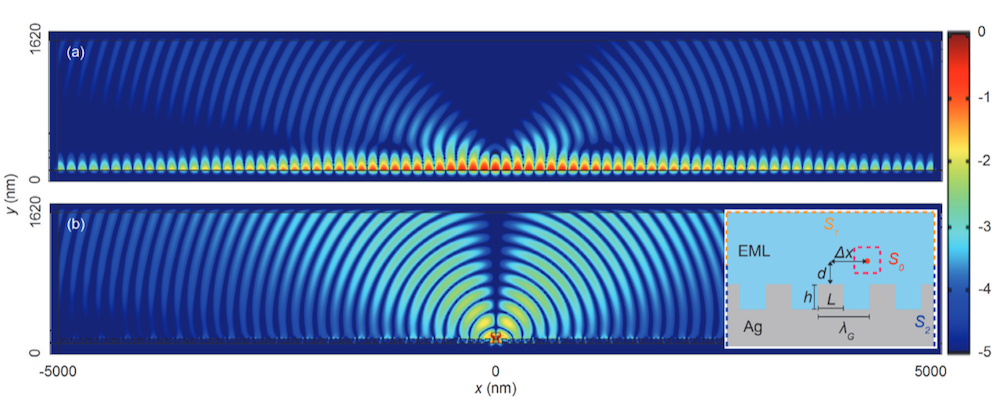

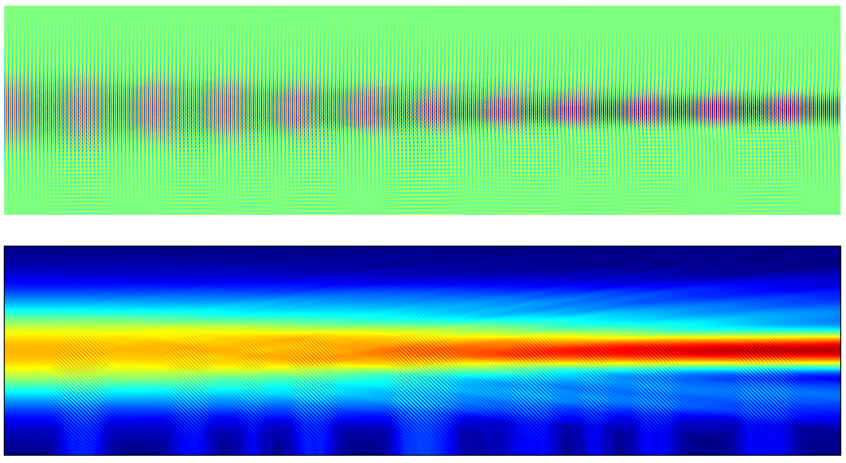

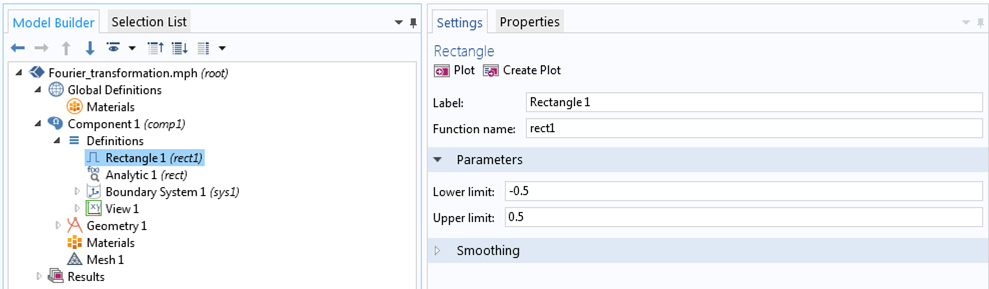

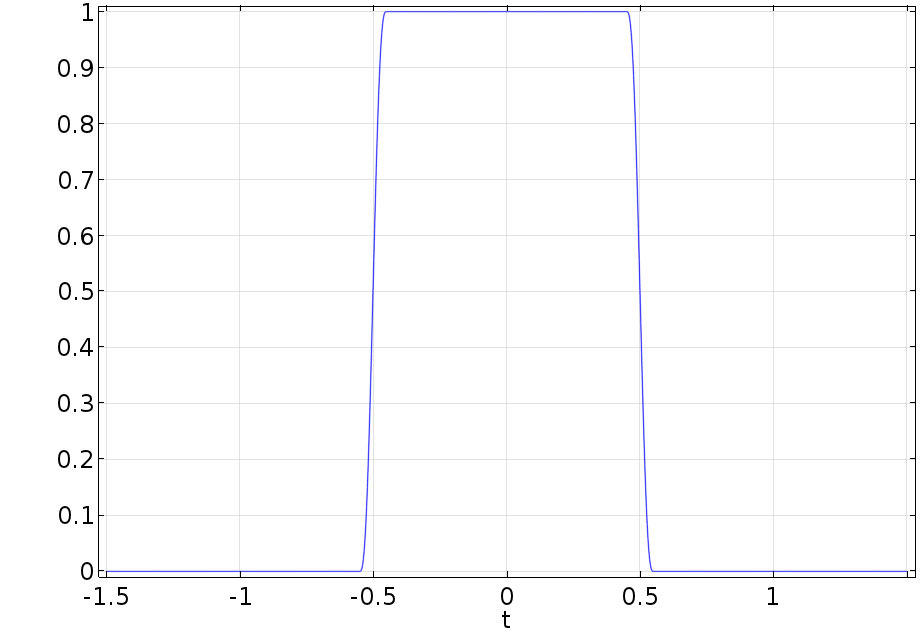

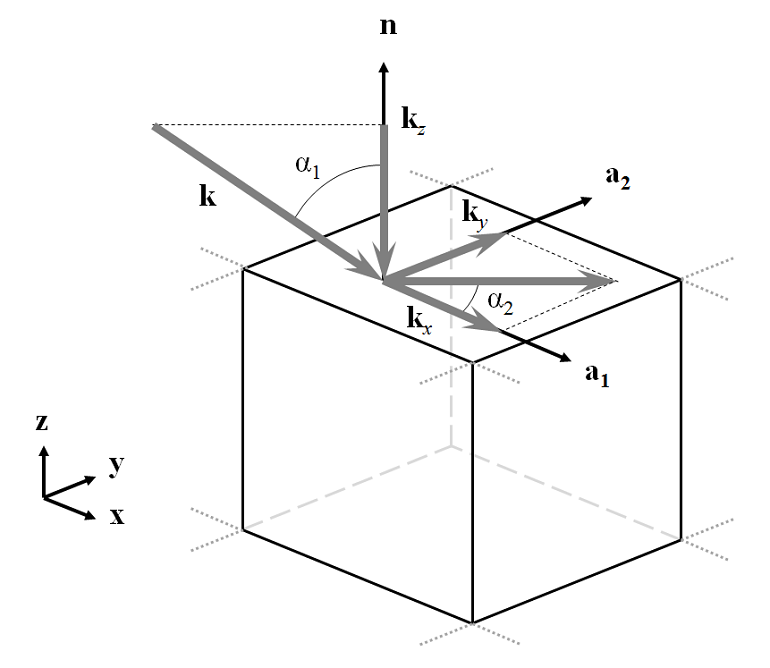

First, let’s consider a parallelepiped volume of free space representing a periodically repeating unit cell with a plane wave passing through it at an angle, as shown below:

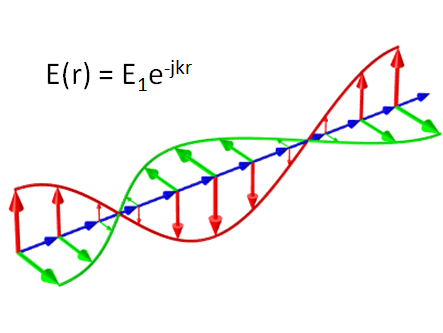

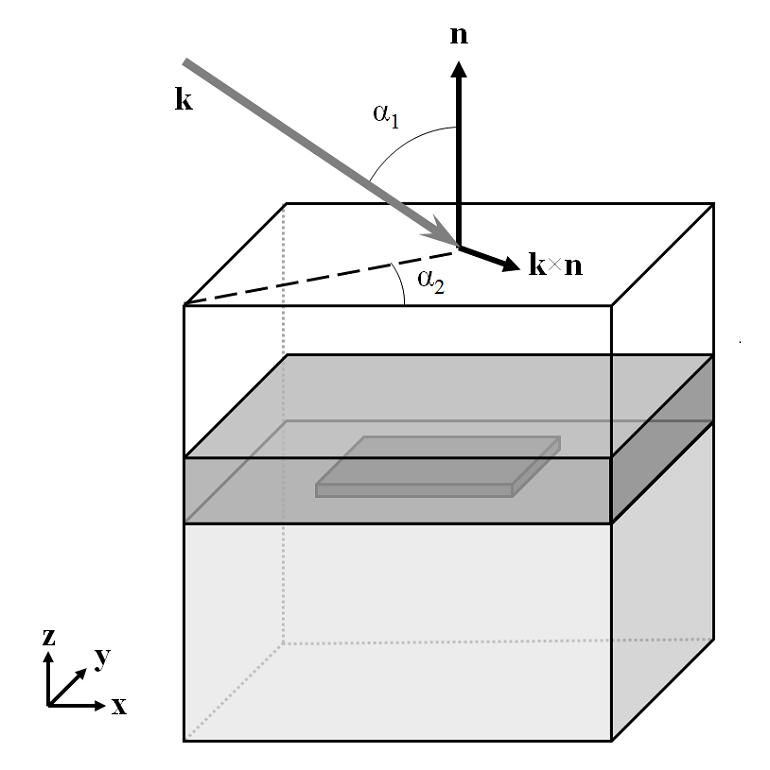

The incident wavevector, \bf{k}, has the following components in the global coordinate system: k_x = k_0 \sin(\alpha_1) \cos(\alpha_2), k_y = k_0 \sin(\alpha_1) \sin(\alpha_2), and k_z = k_0 \cos(\alpha_1). This problem can be modeled by using Periodic boundary conditions on the sides of the domain and Port boundary conditions at the top and bottom. The most complex part of the problem set-up is defining the direction and polarization of the incoming and outgoing waves.
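As a quick numerical check of the component expressions above, the following minimal Python sketch evaluates them for a given pair of angles. The function name `incident_wavevector` and its parameters are illustrative and not part of COMSOL; the sketch assumes free space, so that k_0 = 2\pi/\lambda_0.

```python
import math

def incident_wavevector(wavelength0, alpha1, alpha2):
    """Return (kx, ky, kz) of the incident plane wave in global coordinates.

    wavelength0: free-space wavelength (any length unit; k is in 1/unit)
    alpha1: elevation angle of incidence in radians (0 = normal incidence)
    alpha2: azimuthal angle of incidence in radians
    """
    k0 = 2.0 * math.pi / wavelength0  # free-space wavenumber (assumed medium)
    kx = k0 * math.sin(alpha1) * math.cos(alpha2)
    ky = k0 * math.sin(alpha1) * math.sin(alpha2)
    kz = k0 * math.cos(alpha1)
    return kx, ky, kz

# Example values chosen only for illustration.
kx, ky, kz = incident_wavevector(0.5, 0.3, 0.7)
print(kx, ky, kz)
```

Whatever the angles, the three components should combine to a vector of length k_0, which is a convenient sanity check before entering the angles into a model.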

Defining the Wave Direction

Although the COMSOL software is flexible enough to allow any definition of base coordinate system, in this post we will pick one and use it throughout. The direction of the incident light is defined by two angles, \alpha_1 and \alpha_2, and two vectors: \bf{n}, the outward-pointing normal of the modeling space, and \bf{a_1}, a vector in the plane of incidence. The convention we choose here is to align \bf{a_1} with the global x-axis and \bf{n} with the global z-axis. Thus, the angle between the wavevector of the incoming wave and the global z-axis is \alpha_1, the elevation angle of incidence, where -\pi/2 < \alpha_1 < \pi/2, with \alpha_1 = 0 meaning normal incidence. The angle between the incident wavevector and the global x-axis is the azimuthal angle of incidence, \alpha_2, which lies in the range -\pi/2 < \alpha_2 \leq \pi/2. As a consequence of this definition, positive values of both \alpha_1 and \alpha_2 imply that the wave is traveling in the positive x- and y-directions.

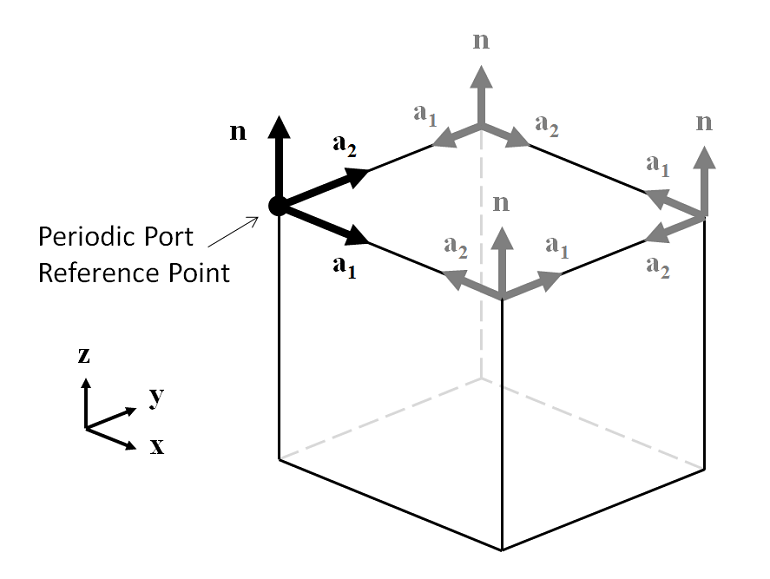

To use the above definition of direction of incidence, we need to specify the \bf{a_1} vector. This is done by picking a Periodic Port Reference Point, which must be one of the corner points of the incident port. The software uses the in-plane edges coming out of this point to define two vectors, \bf{a_1} and \bf{a_2}, such that \bf{a_1 \times a_2 = n}. In the figure below, we can see the four cases of \bf{a_1} and \bf{a_2} that satisfy this condition. Thus, the Periodic Port Reference Point on the incoming side port should be the point at the bottom left of the x-y plane when looking down the z-axis at the surface. By choosing this point, the \bf{a_1} vector becomes aligned with the global x-axis.

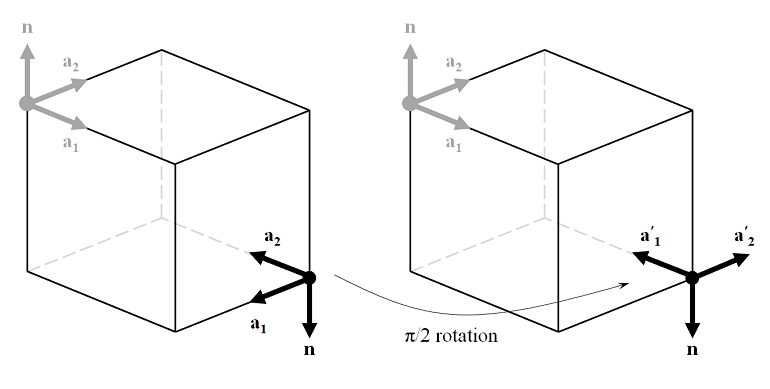

Now that \bf{a_1} and \bf{a_2} have been defined on the incident side due to the choice of the Periodic Port Reference Point, the port on the outgoing side of the modeling domain must also be defined. The normal vector, \bf{n}, points in the opposite direction, hence the choice of the Periodic Port Reference Point must be adjusted. None of the four corner points will give a set of \bf{a_1} and \bf{a_2} that align with the vectors on the incident side, so we must choose one of the four points and adjust our definitions of \alpha_1 and \alpha_2. By choosing a periodic port reference point on the output side that is diametrically opposite the point chosen on the input side and applying a \pi/2 rotation to \alpha_2, the direction of \bf{a_1} is rotated to \bf{a_1'}, which points in the opposite direction of \bf{a_1} on the incident side. As a consequence of this rotation, \alpha_1 and \alpha_2 are switched in sign on the output side of the modeling domain.

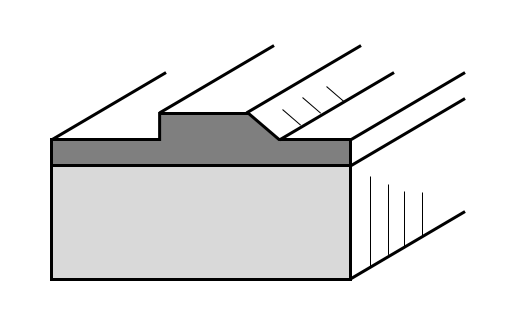

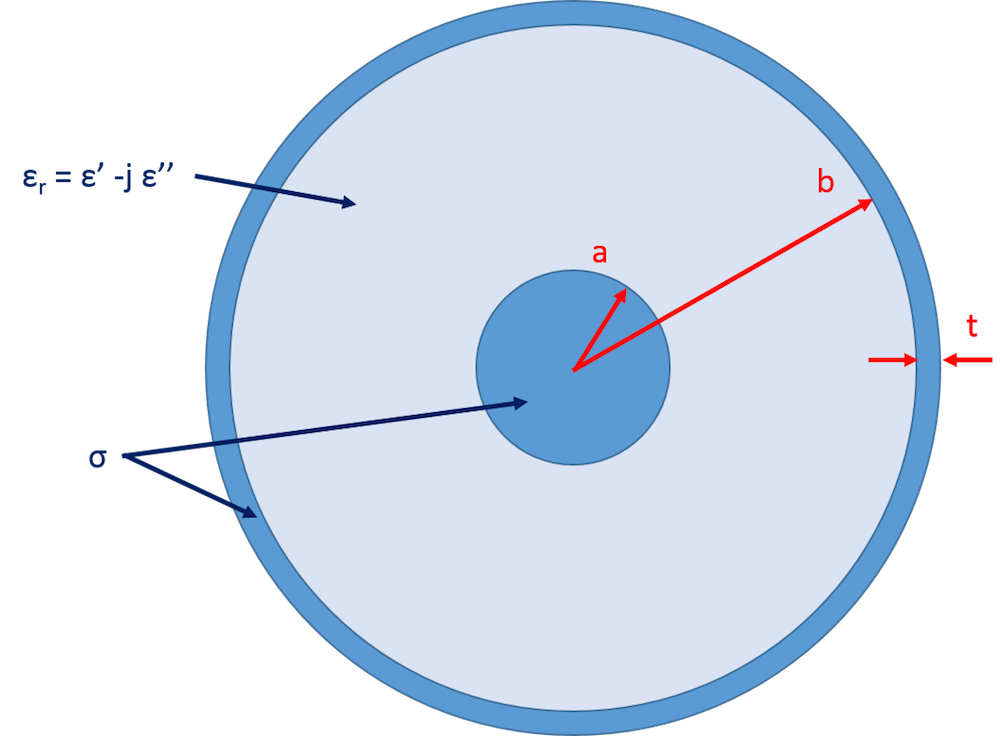

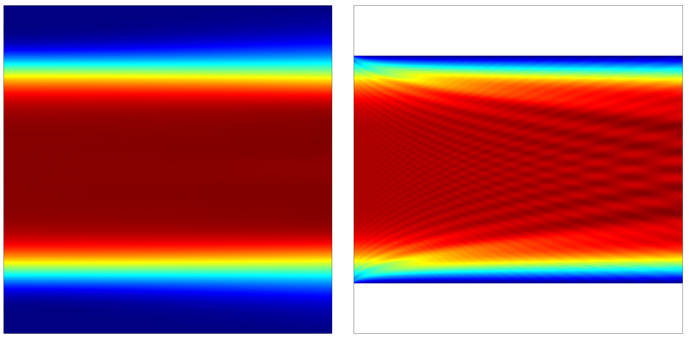

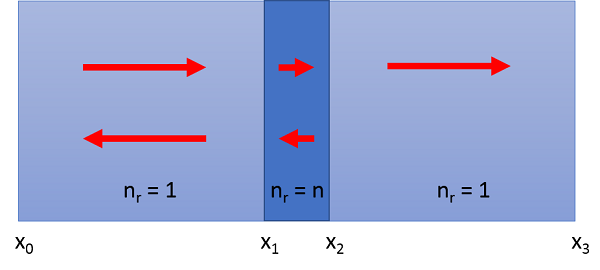

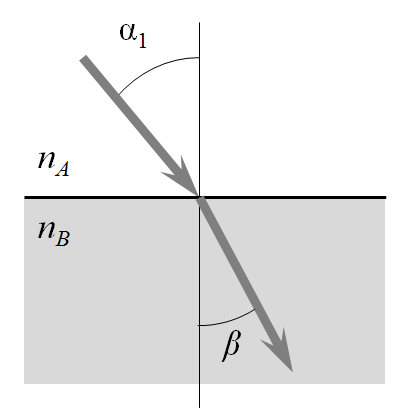

Next, consider a modeling domain representing a dielectric half-space with a refractive index contrast between the input and output port sides that causes the wave to change direction, as shown below. From Snell’s law, we know that the angle of refraction is \beta=\arcsin \left( n_A\sin(\alpha_1)/n_B \right). This lets us compute the direction of the wavevector at the output port. Also, note that this relationship holds even if there are additional layers of dielectric sandwiched between the two half-spaces.

In summary, to define the direction of a plane wave traveling through a unit cell, we first need to choose two points, the Periodic Port Reference Points, which are diametrically opposite on the input and output sides. These points define the vectors \bf{a_1} and \bf{a_2}. As a consequence, \alpha_1 and \alpha_2 on the input side can be defined with respect to the global coordinate system. On the output side, the direction angles become: \alpha_{1,out} = -\arcsin \left( n_A\sin(\alpha_1)/n_B \right) and \alpha_{2,out}=-\alpha_2 + \pi/2.
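The two output-side relations in the summary above can be evaluated directly. The following is a minimal Python sketch, with a hypothetical function name and argument names that do not come from COMSOL. It assumes lossless media with real refractive indices and raises an error when no propagating refracted wave exists.

```python
import math

def output_port_angles(alpha1, alpha2, n_a, n_b):
    """Return (alpha1_out, alpha2_out) in radians for the output port.

    alpha1, alpha2: incidence angles on the input side, in radians
    n_a, n_b: real refractive indices on the input and output sides
    """
    sin_beta = n_a * math.sin(alpha1) / n_b  # Snell's law
    if abs(sin_beta) > 1.0:
        raise ValueError("no propagating refracted wave (total internal reflection)")
    alpha1_out = -math.asin(sin_beta)
    alpha2_out = -alpha2 + math.pi / 2.0
    return alpha1_out, alpha2_out

# Illustrative values: air to glass at 30 degrees elevation, 10 degrees azimuth.
a1_out, a2_out = output_port_angles(math.radians(30.0), math.radians(10.0), 1.0, 1.5)
print(math.degrees(a1_out), math.degrees(a2_out))
```

Computing these angles once as named parameters keeps the input and output port settings consistent when the angle of incidence is swept.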

Defining the Polarization

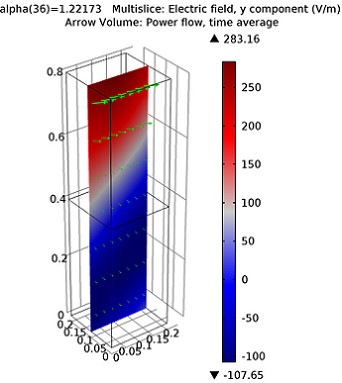

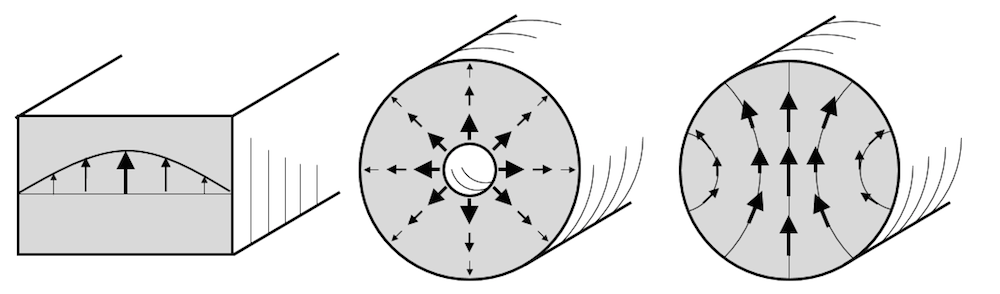

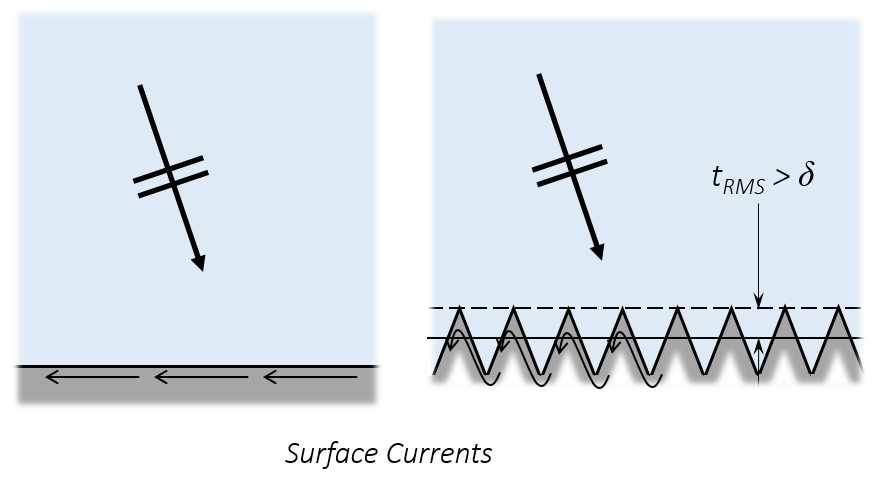

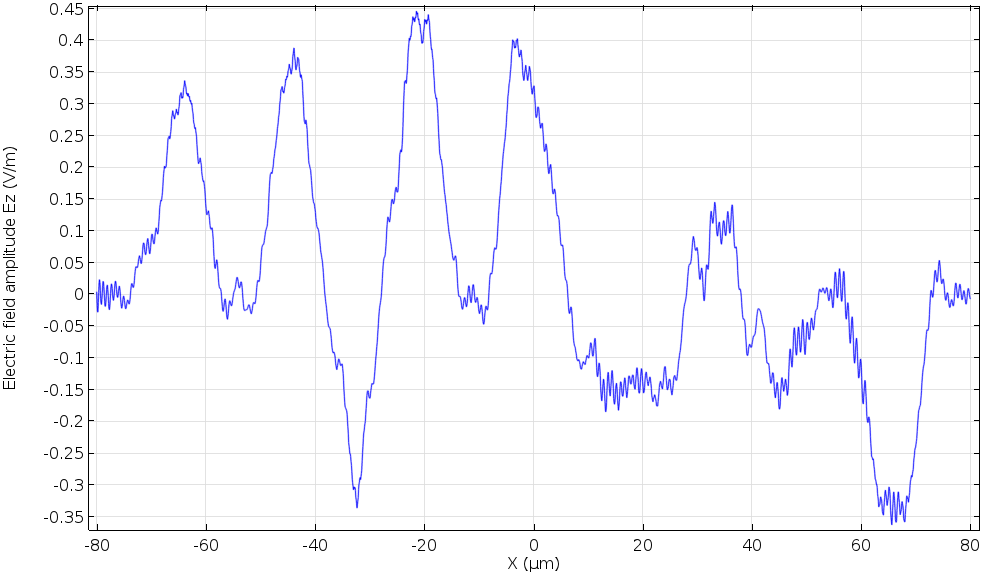

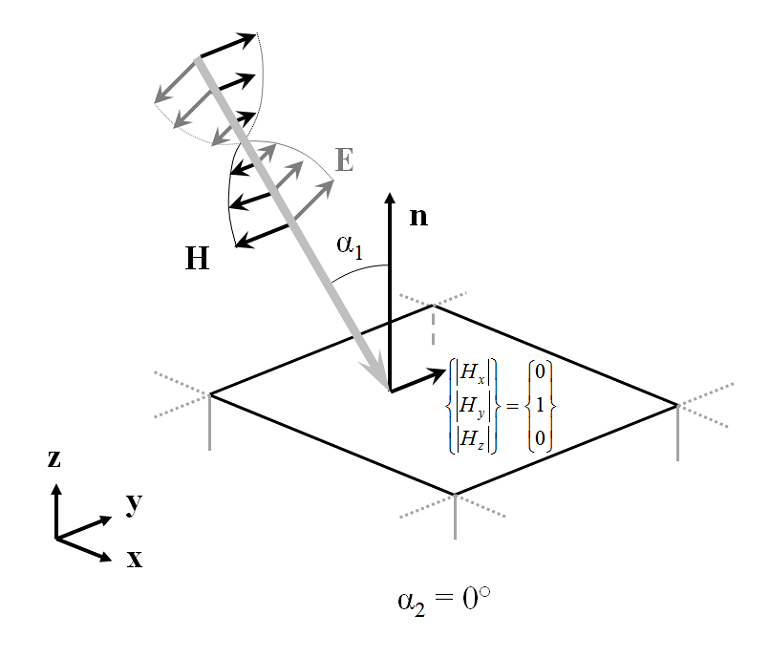

The incoming plane wave can be in one of two polarizations, with either the electric or the magnetic field parallel to the x-y plane. All other polarizations, such as circular or elliptical, can be constructed from a linear combination of these two. The figure below shows the case of \alpha_2 = 0, with the magnetic field parallel to the x-y plane. For the case of \alpha_2 = 0, the magnetic field amplitude at the input and output ports is (0,1,0) in the global coordinate system. As the beam is rotated such that \alpha_2 \ne 0, the magnetic field amplitude becomes (\sin(\alpha_2), \cos(\alpha_2),0). For the orthogonal polarization, the electric field magnitude at the input can be defined similarly. At the output port, the field components in the x-y plane can be defined in the same way.

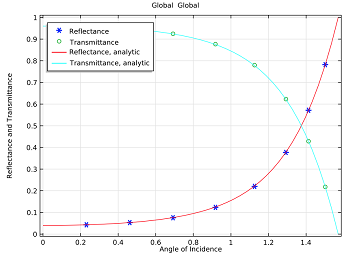

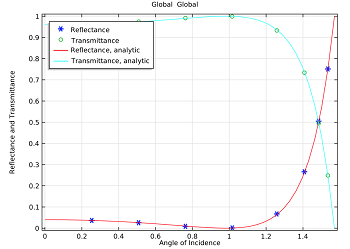

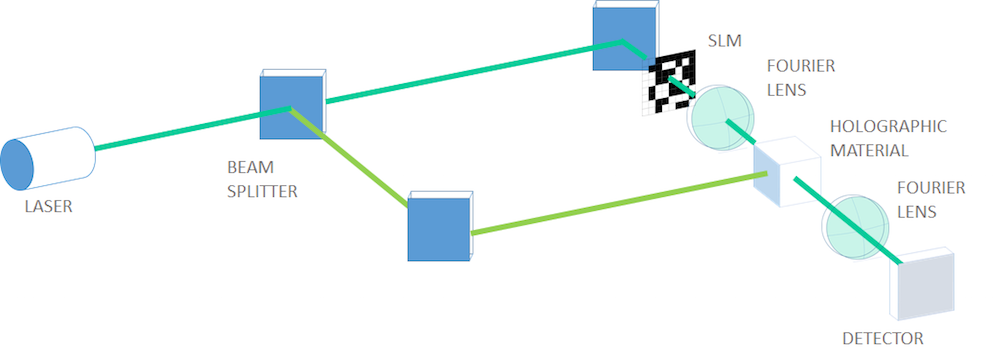

So far, we’ve seen how to define the direction and polarization of a plane wave that is propagating through a unit cell around a dielectric interface. You can see an example model of this in the Model Gallery that demonstrates agreement with the analytically derived Fresnel equations.

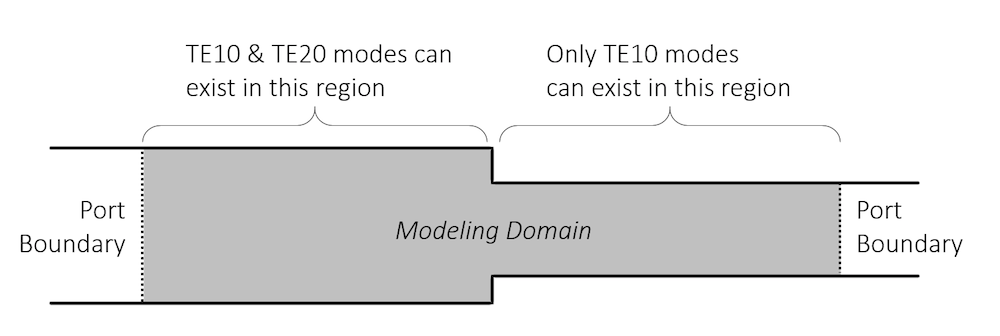

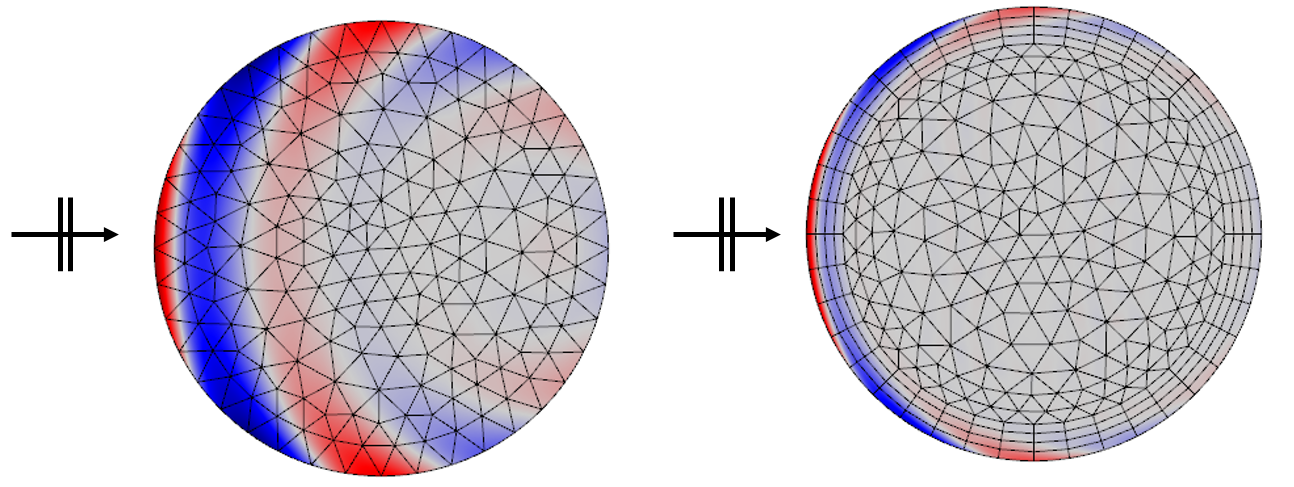

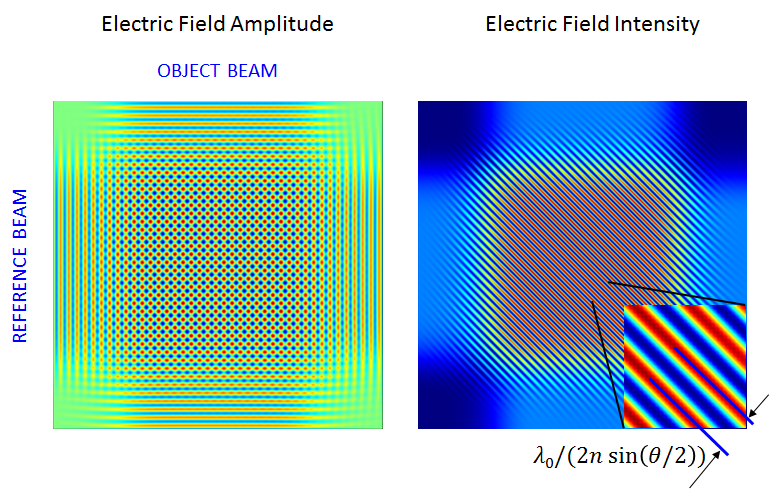

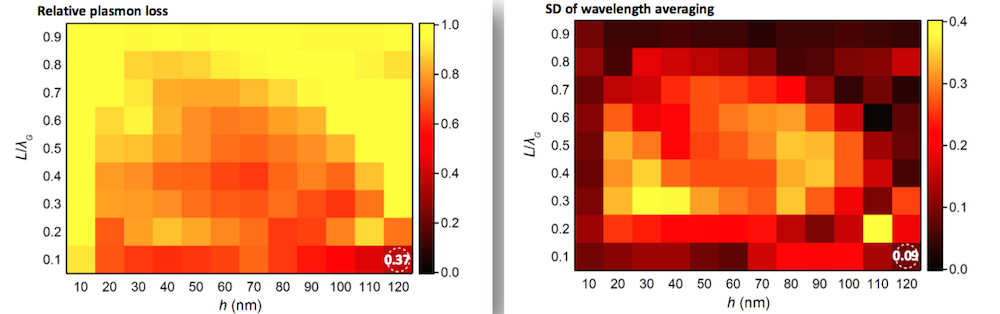

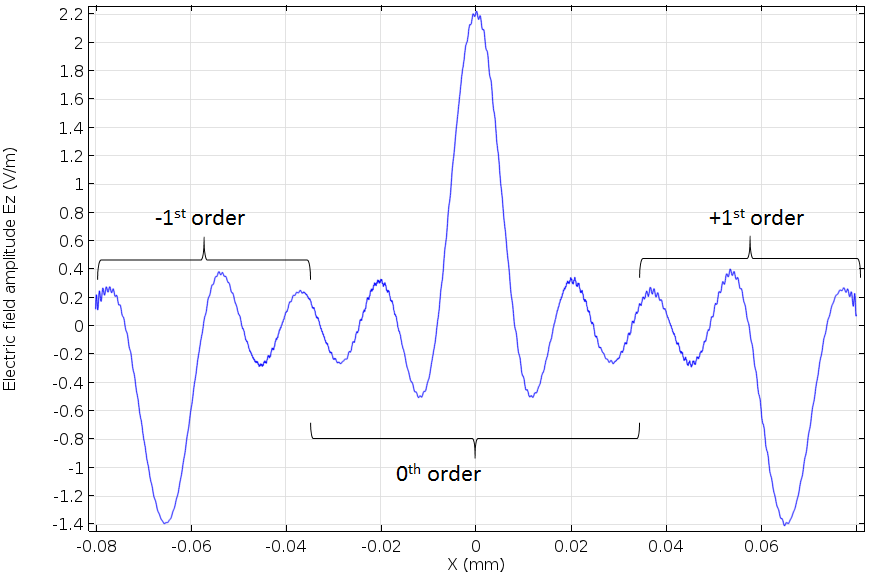

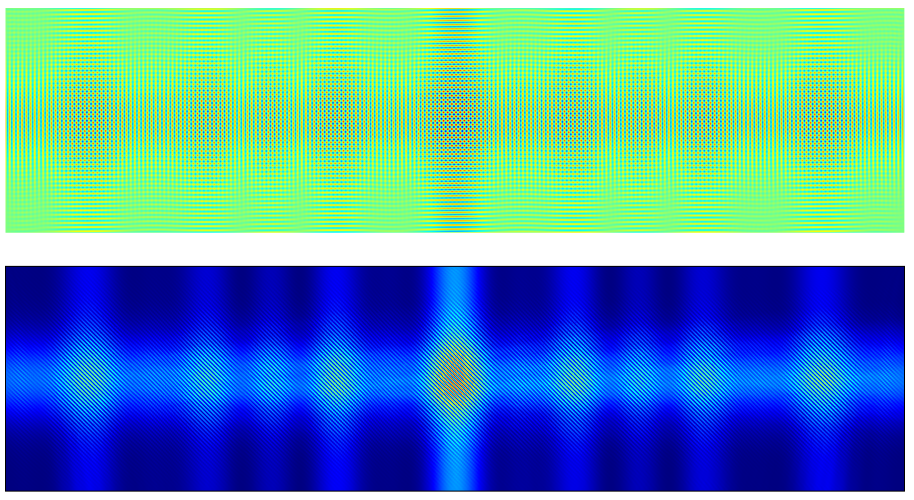

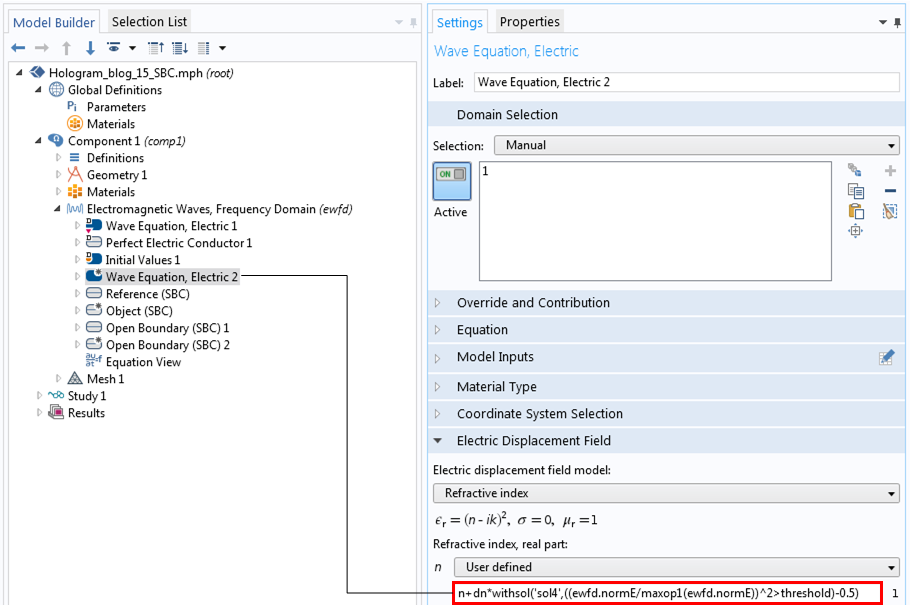

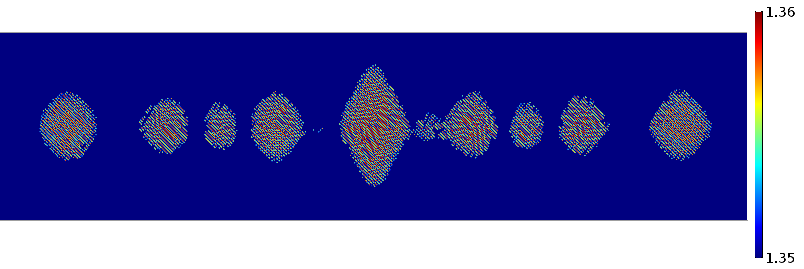

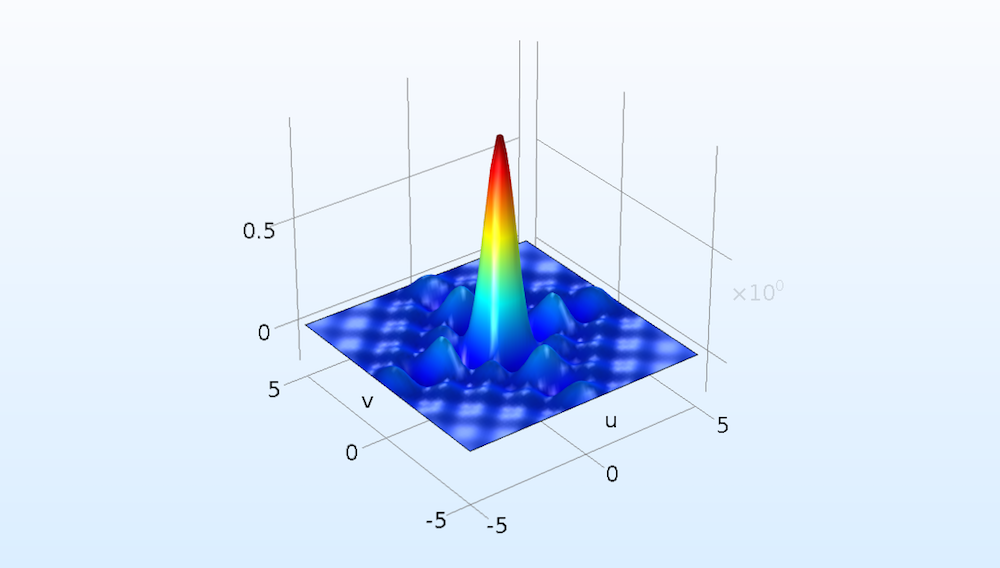

Defining the Diffraction Orders

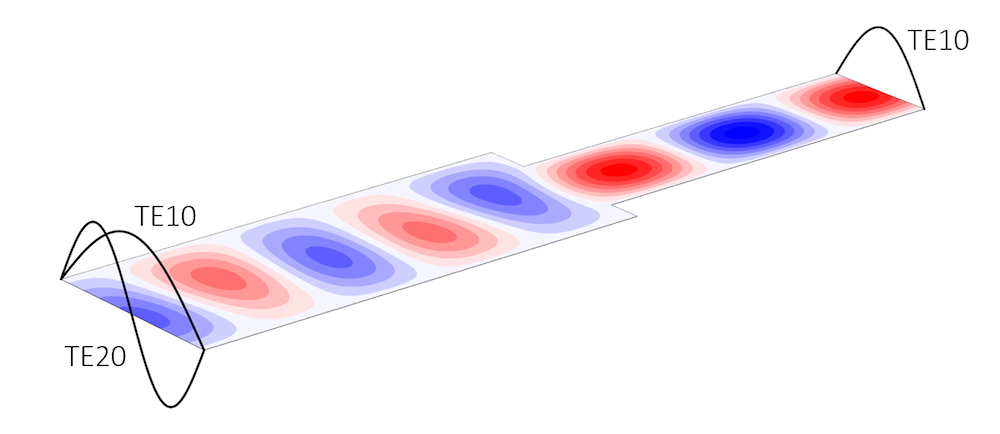

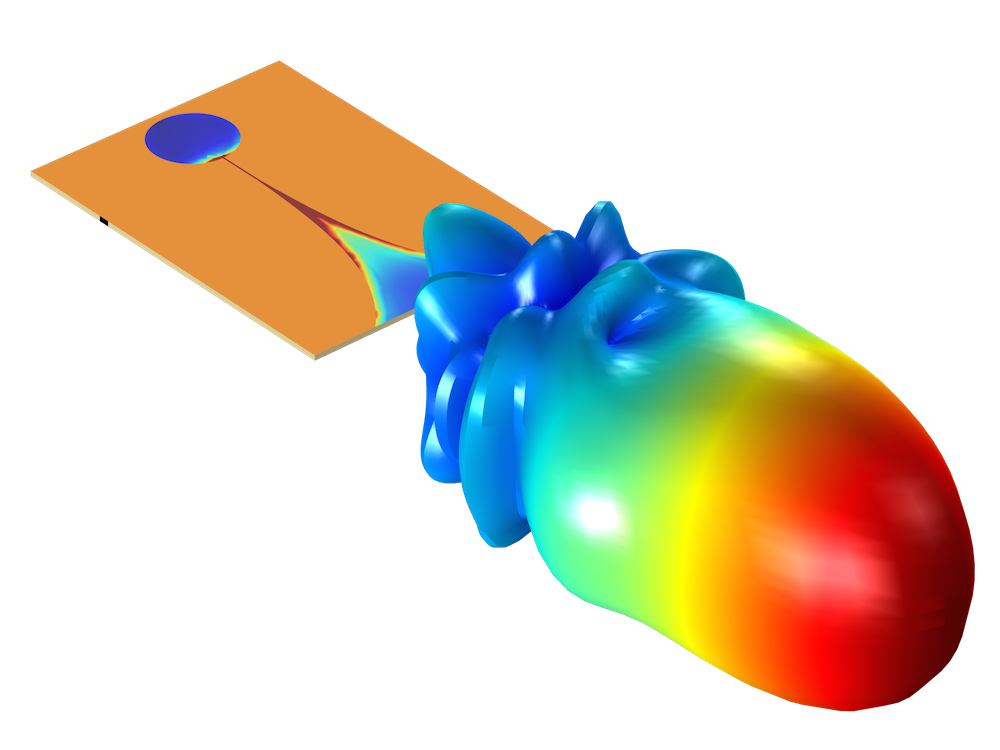

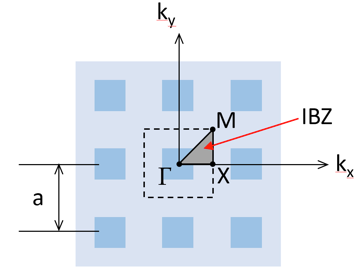

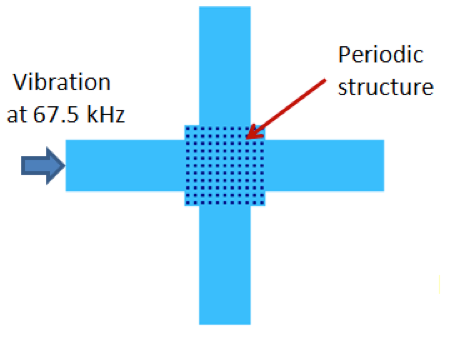

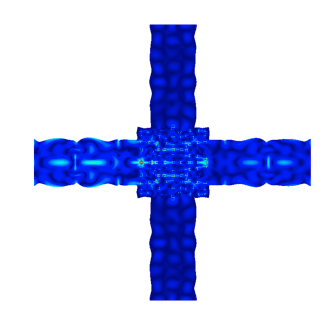

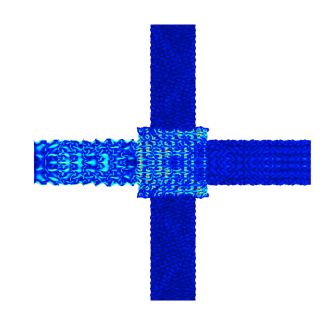

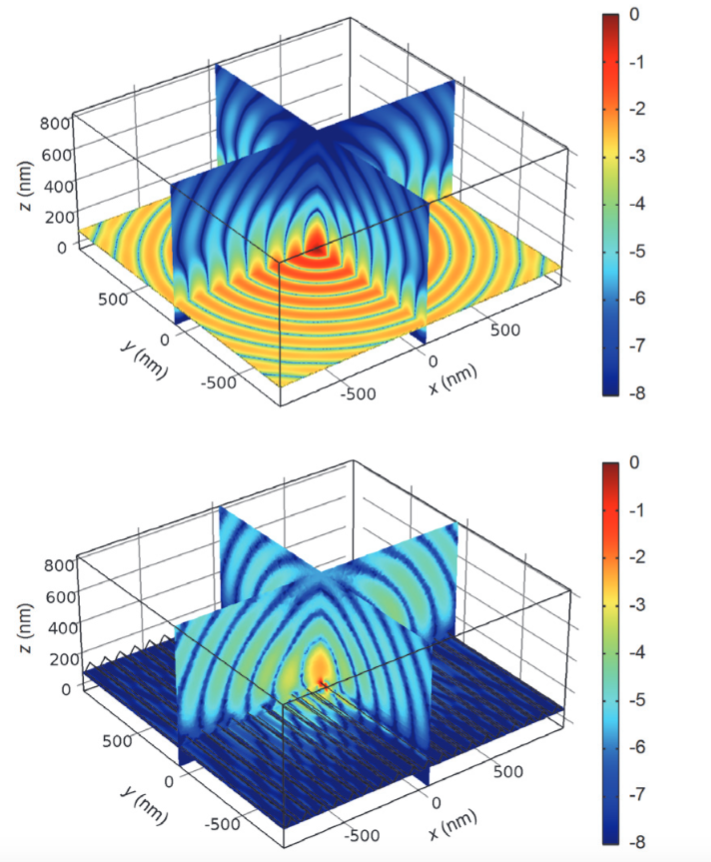

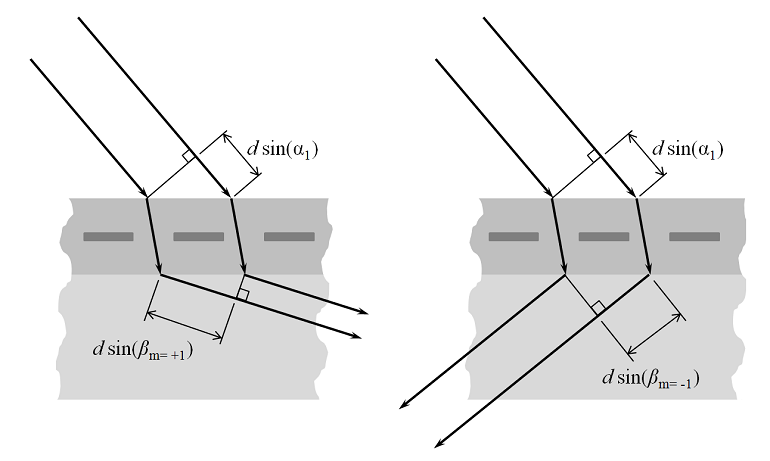

Next, let’s examine what happens when we introduce a structure with periodicity into the modeling domain. Consider a plane wave with \alpha_1, \alpha_2 \ne 0 incident upon a periodic structure as shown below. If the wavelength is sufficiently short compared to the grating spacing, one or several diffraction orders can be present. To understand these diffraction orders, we must look at the plane defined by the \bf{n} and \bf{k} vectors as well as in the plane defined by the \bf{n} and \bf{k \times n} vectors.

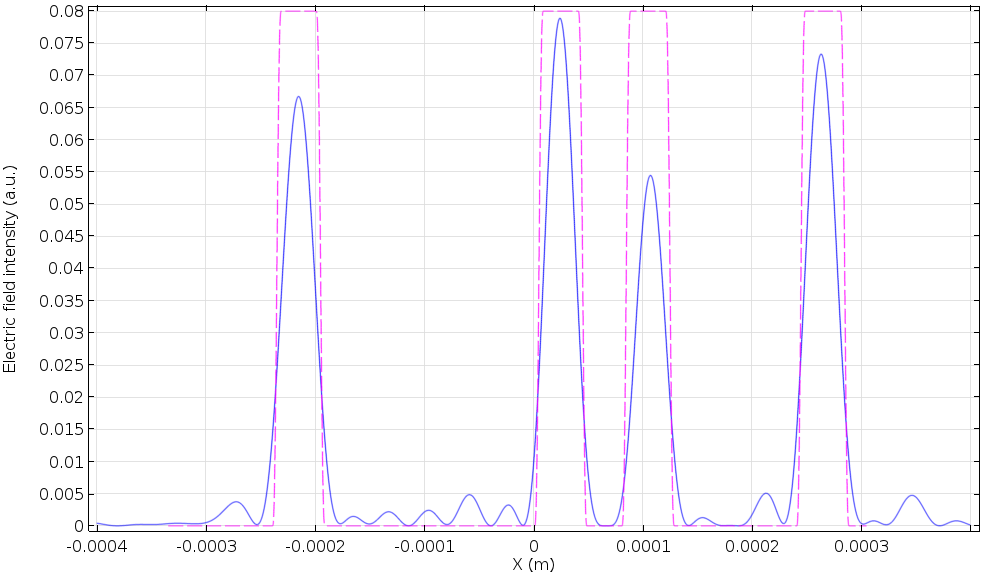

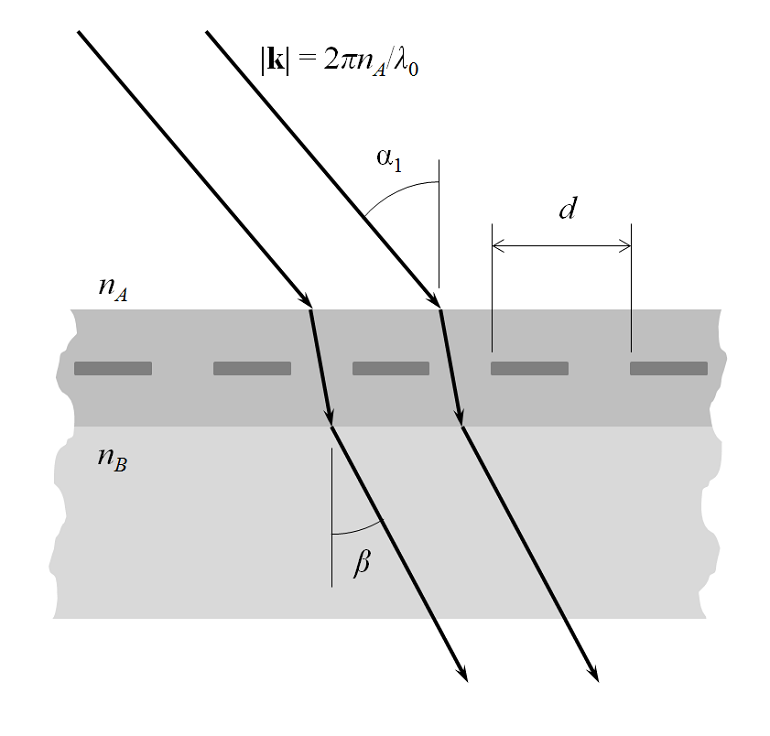

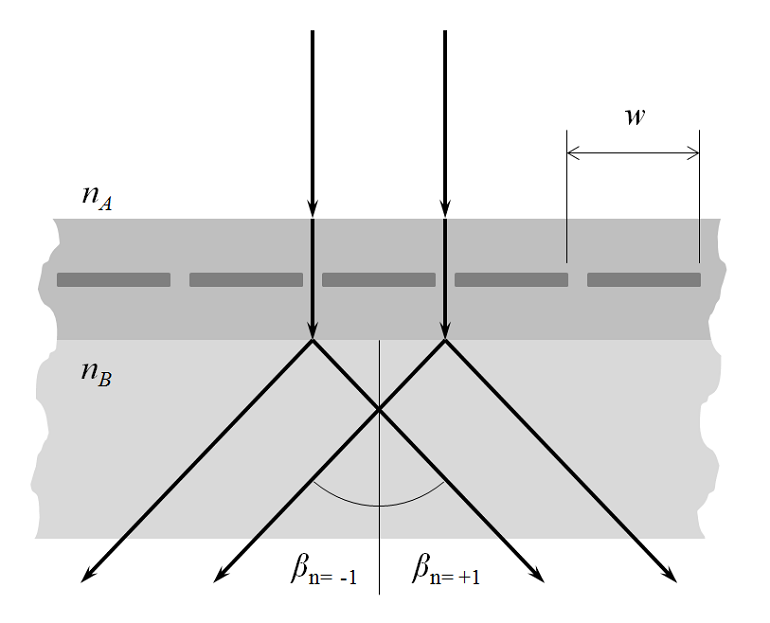

First, looking normal to the plane defined by \bf{n} and \bf{k}, we see that there can be a transmitted 0th order mode with direction defined by Snell’s law as described above. There is also a 0th order reflected component. There also may be some absorption in the structure, but that is not pictured here. The figure below shows only the 0th order transmitted mode. The spacing, d, is the periodicity in the plane defined by the \bf{n} and \bf{k} vectors.

For short enough wavelengths, there can also be higher-order diffracted modes. These are shown in the figure below, for the m=\pm1 cases.

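The condition for the existence of these modes is that:

m\lambda_0 = d \left( n_B \sin(\beta_m) - n_A \sin(\alpha_1) \right)

for: m=0,\pm 1, \pm 2,…, where \lambda_0 is the wavelength in vacuum.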

For m=0, this reduces to Snell’s law, as above. For m \ne 0, if the difference in path lengths equals an integer number of vacuum wavelengths, then there is constructive interference and a beam of order m is diffracted at angle \beta_{m}. Note that there need not be equal numbers of positive and negative m-orders.
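To see which m-orders can propagate for a given geometry, the condition can be solved for \sin(\beta_m) and checked against the range [-1, 1]. The sketch below is a minimal Python illustration that assumes the standard grating condition m\lambda_0 = d(n_B \sin(\beta_m) - n_A \sin(\alpha_1)), with \lambda_0 the vacuum wavelength. The function name, its arguments, and the `max_order` search limit are hypothetical, and the sign convention for m may differ from the one used by the software.

```python
import math

def transmitted_orders(wavelength0, d, alpha1, n_a, n_b, max_order=10):
    """Map each propagating transmitted order m to its angle beta_m (radians).

    Assumes m * wavelength0 = d * (n_b * sin(beta_m) - n_a * sin(alpha1)).
    Orders with |sin(beta_m)| > 1 are evanescent and are skipped.
    """
    orders = {}
    for m in range(-max_order, max_order + 1):
        sin_beta = (n_a * math.sin(alpha1) + m * wavelength0 / d) / n_b
        if abs(sin_beta) <= 1.0:
            orders[m] = math.asin(sin_beta)
    return orders

# Illustrative numbers: period 1.5 wavelengths, free space on both sides.
normal = transmitted_orders(1.0, 1.5, 0.0, 1.0, 1.0)
oblique = transmitted_orders(1.0, 1.5, math.radians(30.0), 1.0, 1.0)
print(sorted(normal))   # orders at normal incidence
print(sorted(oblique))  # orders at 30 degrees incidence
```

With these illustrative numbers, normal incidence supports the orders -1, 0, and 1, while 30 degree incidence supports -2, -1, and 0. The second case is an example of unequal numbers of positive and negative m-orders.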

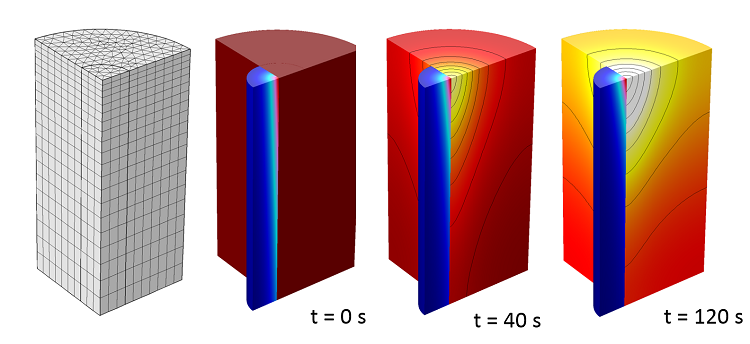

Next, we look along the plane defined by the \bf{n} and \bf{k} vectors. That is, we rotate our viewpoint around the z-axis such that the incident wavevector appears to be coming in normally to the surface. The beams diffracted in this plane are indexed as the n-order beams. Note that the periodic spacing, w, will be different in this plane and that there will always be equal numbers of positive and negative n-orders.

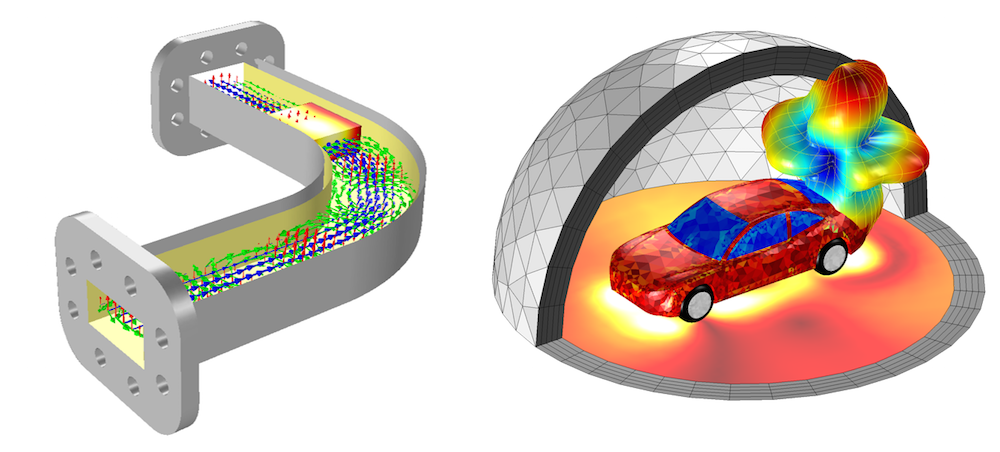

COMSOL will automatically compute these m,n \ne 0 order modes during the set-up of a Periodic Port and define listener ports so that it is possible to evaluate how much energy gets diffracted into each mode.

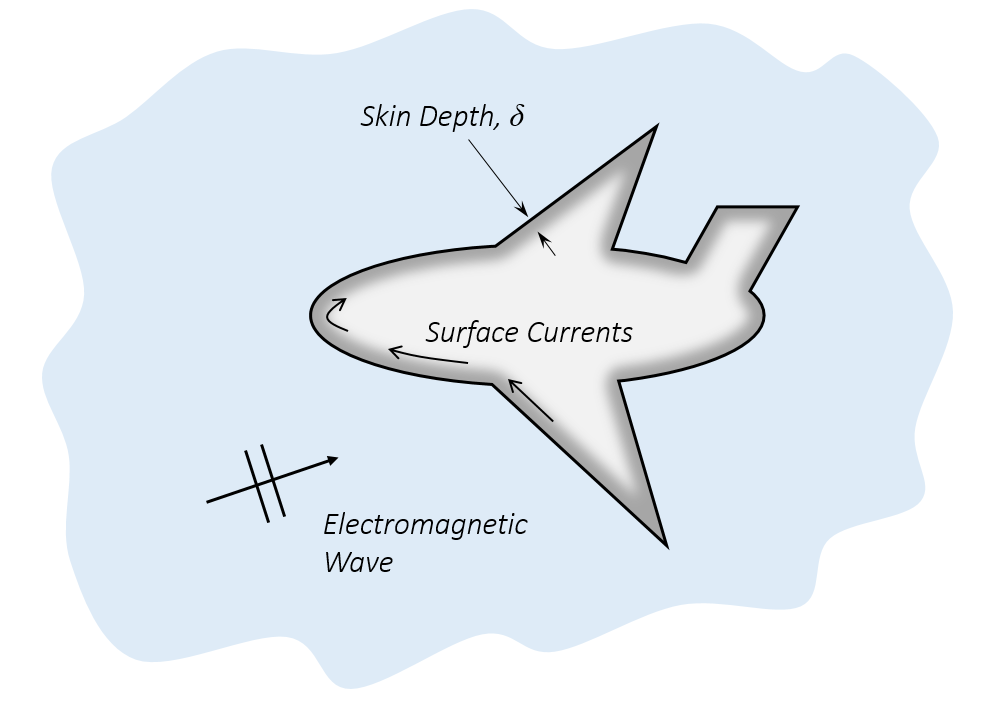

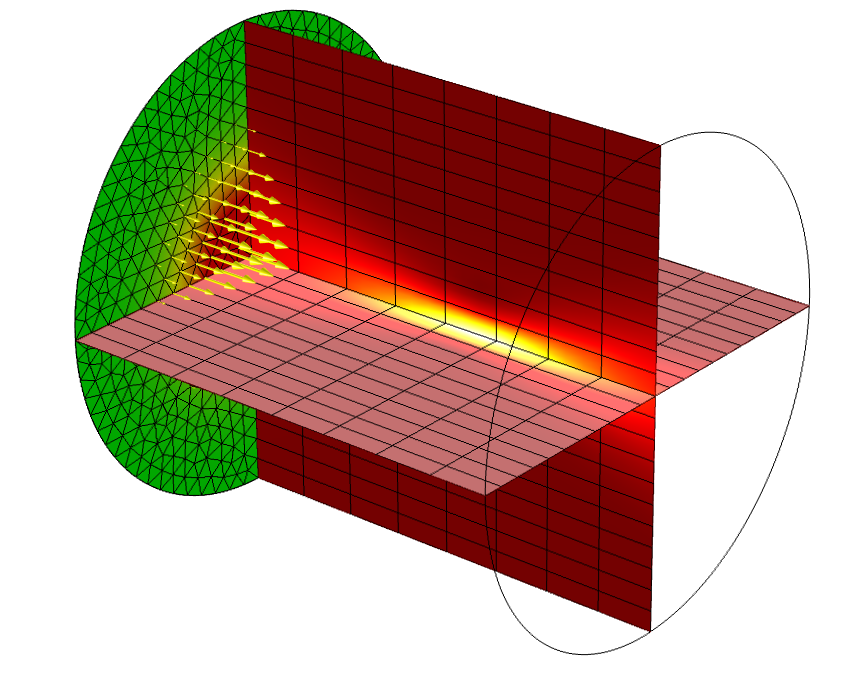

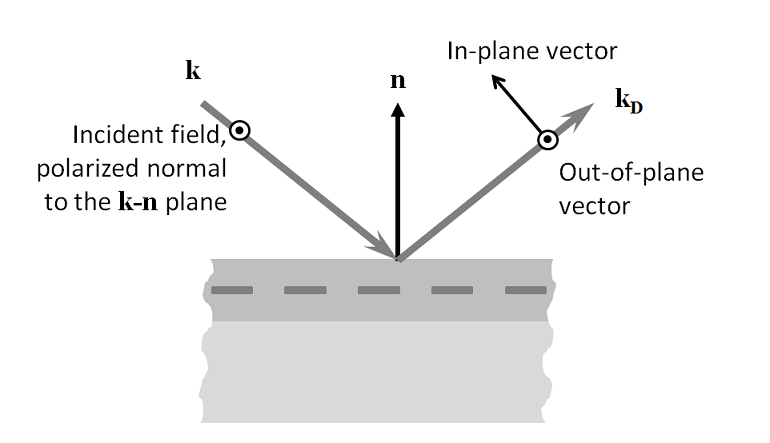

Last, we must consider that the wave may experience a rotation of its polarization as it gets diffracted. Thus, each diffracted order consists of two orthogonal polarizations, the In-plane vector and Out-of-plane vector components. Looking at the plane defined by \bf{n} and the diffracted wavevector \bf{k_D}, the diffracted field can have two components. The Out-of-plane vector component is the diffracted beam that is polarized out of the plane of diffraction (the plane defined by \bf{n} and \bf{k_D}), while the In-plane vector component has the orthogonal polarization. Thus, if the In-plane vector component is non-zero for a particular diffraction order, this means that the incoming wave experiences a rotation of polarization as it is diffracted. Similar definitions hold for the n \ne 0 order modes.
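The split into in-plane and out-of-plane components can be illustrated geometrically. The following minimal Python sketch projects a real field vector onto two unit vectors built from \bf{n} and \bf{k_D}. The helper names are hypothetical, the signs of the unit vectors are an arbitrary choice that may not match the software's convention, and the sketch assumes \bf{k_D} is not parallel to \bf{n}, since the plane of diffraction is not uniquely defined in that case.

```python
import math

def cross(u, v):
    """Cross product of two 3-vectors given as tuples."""
    return (u[1] * v[2] - u[2] * v[1],
            u[2] * v[0] - u[0] * v[2],
            u[0] * v[1] - u[1] * v[0])

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def normalize(u):
    length = math.sqrt(dot(u, u))
    if length < 1e-12:
        raise ValueError("k_D is parallel to n; plane of diffraction is undefined")
    return tuple(a / length for a in u)

def split_polarization(field, k_d, n=(0.0, 0.0, 1.0)):
    """Return (in_plane, out_of_plane) amplitudes of a real field vector.

    The out-of-plane direction is normal to the plane spanned by n and k_d;
    the in-plane direction lies in that plane and is perpendicular to k_d.
    """
    e_out = normalize(cross(n, k_d))
    e_in = normalize(cross(e_out, k_d))
    return dot(field, e_in), dot(field, e_out)

# Illustrative case: diffracted wavevector tilted 0.4 rad from z in the x-z plane.
k_d = (math.sin(0.4), 0.0, math.cos(0.4))
print(split_polarization((0.0, 2.0, 0.0), k_d))  # field along y: purely out-of-plane
```

Comparing the two amplitudes for each order gives a simple measure of how much the polarization has rotated relative to the incident wave.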

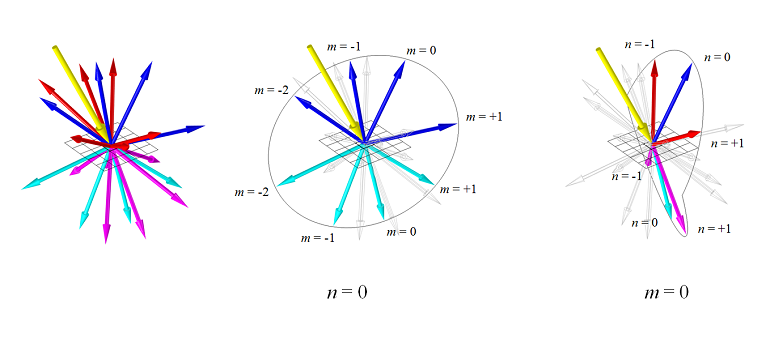

Consider a periodic structure on a dielectric substrate. When the incident beam comes in at \alpha_1, \alpha_2 \ne 0 and there are higher diffracted orders, the visualization of all of the diffracted orders can become quite involved. In the figure below, the incoming plane wave direction is shown as a yellow vector. The n=0 diffracted orders are shown as blue arrows for diffraction in the positive z-direction and cyan arrows for diffraction in the negative z-direction. Diffraction into the n \ne 0 order modes is shown as red and magenta arrows for the positive and negative directions. There can be diffraction into each of these directions, and the diffracted wave can be polarized either in or out of the plane of diffraction. The plane of diffraction itself is visualized as a circular arc. Note that the plane of diffraction for the n \ne 0 modes is different in the positive and negative z-directions.

All of the ports are automatically set up when defining a periodic structure in 3D. They capture these various diffracted orders and can compute the fields and relative phase in each order. Understanding the meaning and interpretation of these ports is helpful when modeling periodic structures.